Is your latest song on repeat really sung by Blackpink or Justin Bieber? There’s a shockingly high chance that it’s a deepfake voice clone created to trick you, according to a new study from musicMagpie aptly titled Bop or Bot? The study found an astonishing 1.63 million AI covers just on YouTube. Listeners may not always be able to tell the difference, and it could actually have a financial impact on the artists whose voices are being used for the songs.

The biggest victims of these deepfake tracks are K-pop groups, accounting for 35% of the top twenty most-streamed AI-generated artists. Blackpink tops the list, with more than 17.3 million views of AI-generated content mimicking the group; an AI cover of ‘Batter Up’ by BabyMonster nabbed 2.5 million views alone. Justin Bieber ranks second at over 13 million views, including his biggest fake hit, a 10.1-million-view cover of ‘Nothing’s Gonna Change My Love For You’ by George Benson. Rounding out the top three stolen voices is Kanye West at 3.4 million views for AI-generated tracks, including a cover of ‘Somebody That I Used to Know’ with 2.6 million streams.

There’s a more literal theft involved, too. The financial implications of AI-generated music are substantial, according to musicMagpie. The company estimated that the surge in AI-generated content could translate to more than $13.5 million in lost revenue for original creators. That’s about $500,000 lost for Blackpink, while Bieber and West lost out on $202,964 and $130,000, respectively.

Voice Tricks

Even being deceased can’t save artists from AI theft, as the 8.9 million views for the AI ghost of Frank Sinatra and the 3.55 million for Freddie Mercury can attest. As for unlicensed fictional voices, there’s an unexpected appeal to SpongeBob SquarePants performing songs, with AI covers in the yellow cartoon character’s voice collecting 10.2 million views. His biggest hit? Don McLean’s ‘American Pie.’

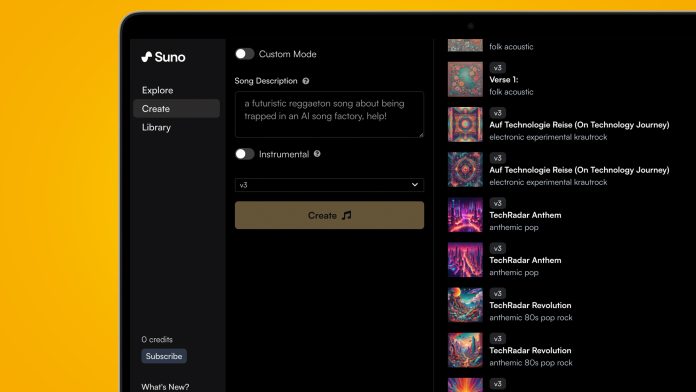

Part of the issue is that people aren’t good at telling AI-generated music from music made by humans. In a further study, musicMagpie found that 72% of participants were sure they could tell an AI-produced song from one made by humans, but 49% couldn’t do so. And it’s not an age thing; Gen Z participants were actually the most easily fooled. All of this is fodder for the ongoing legal battles facing AI music startups like Suno and Udio over using unlicensed material to train their AI models. If the Recording Industry Association of America (RIAA) and music labels can successfully argue that there’s a real monetary loss involved, they’ll likely have a stronger case against the AI model developers.

“These findings highlight a growing challenge in the music industry: as AI technology becomes more sophisticated, music lovers across multiple generations are struggling to discern between what is real and what is artificially created,” the study’s authors point out. “If nearly half of listeners can’t tell the difference between a human artist and an AI, what does this mean for the value of human creativity? How will this affect the way we create, perceive, and appreciate music in the years to come? These are questions that the industry must grapple with as AI continues to evolve.”
